James Pethokoukis is on a mission to show that rising income inequality isn’t really a big deal. The big gun in his arsenal is a 2009 paper by Robert Gordon, which says:

This paper shows that the rise in American inequality has been exaggerated in at least three senses. First, the conventional measure showing a large gap between growth of median real household income and of productivity greatly overstates the increase compared to a conceptually consistent alternative gap concept, which increases at only one-tenth the rate of the conventional gap between 1979 and 2007….Second, the increase of inequality is not a steady ongoing process; after widening most rapidly between 1981 and 1993, the growth of inequality reversed itself and became negative during 2000-2007.

Pethokoukis, responding to a CJR piece by Ryan Chittum, says: “Chittum, nor other liberal economic pundits such as Ezra Klein, Jonathan Chait, Kevin Drum, Ryan Avent, have made an effort to dispute Gordon, hardly a conservative economist. Liberals don’t even like quoting that above bit.”

Gordon is a good economist, and I haven’t made an effort to dispute him because I don’t really dispute most of what he says. I just think it’s largely irrelevant. Let’s take the various claims in his paper one at a time:

- Comparing income growth to productivity growth is a bad way of demonstrating the sluggishness of middle-class wages. Fine. I rarely do this, because I happen to agree that it's problematic. But this has nothing to do with the existence of income inequality itself. For that, all you need to do is compare the incomes of the poor and the rich over time.

-

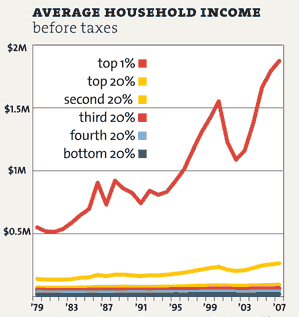

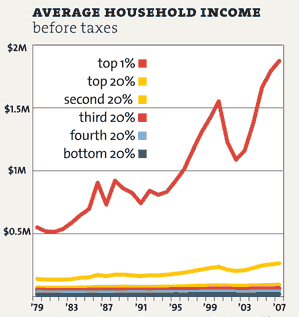

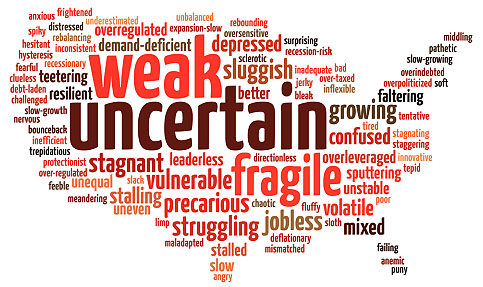

Income inequality didn’t grow between 2000-2007. Yes, but those dates are egregiously cherry-picked. The dotcom bubble reached its height in 2000 (see chart at right), which meant that the income of the very rich also peaked that year. It fell during the dotcom bust and then started increasing again around 2003. It fell again during the financial crisis of 2008, and then started rising again within a year. If you look at a graph of the top 1%, you see peaks and valleys because their income is fairly volatile. But you also see a secular rise over the past three decades that shows no real signs of stopping.

-

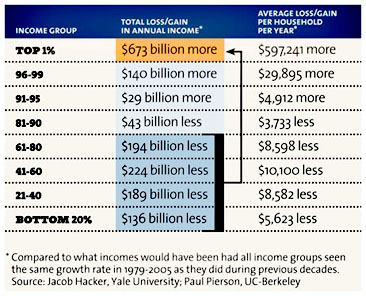

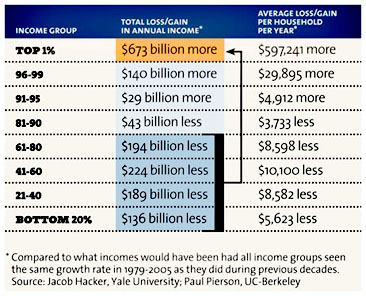

Income inequality is mostly a phenomenon of the top 1%. This is absolutely true. The top 10% have done well over the past 30 years and the top 20% have done OK. Nothing spectacular though. The real

action has been elsewhere: an enormous movement of income from the bottom 80% to the top 1% (see table at right). I’m not sure why Pethokoukis thinks this is evidence against the growth of income inequality, though. In fact, it’s evidence that it’s even worse than you think.

action has been elsewhere: an enormous movement of income from the bottom 80% to the top 1% (see table at right). I’m not sure why Pethokoukis thinks this is evidence against the growth of income inequality, though. In fact, it’s evidence that it’s even worse than you think.

- Inflation has been lower for the poor than for the rich. I'm not qualified to judge this, but who cares anyway? If it's true, it might be good news for the poor, though it depends a lot on just why inflation rates for the poor are lower. If it's because cheap goods have gotten better and cheaper, that's great. But if it's because the poor have been forced to switch to ever crappier goods over time, that's not so great. In any case, this is mostly useful if you're interested in evaluating the lived experience of the poor. That's a fine topic, and one that deserves study. But if you're interested in income inequality, it's irrelevant. In that case, you just want to know how the private economy is allocating income to various classes of people, and the answer is pretty simple: over the past 30 years, less and less is going to the poor and middle class and more and more is going to the well-off and the rich.

Jon Chait has a somewhat more epic response here. The nickel version, though, is that Gordon’s paper does say that income inequality has increased dramatically over the past three decades.1 He just has some caveats to the data. But while those caveats are interesting, none of them change the fact that the rich have hoovered up a vastly disproportionate and increasing amount of America’s income growth since the mid-70s. There’s just no getting away from that simple raw reality.

UPDATE: Matt O’Brien tweets: “I talked to Robert Gordon here. He was flabbergasted his work was being used to argue inequality is a myth.” Here’s more:

Consider the research and writing of Robert Gordon, a professor of social sciences at Northwestern University. He has done pioneering work questioning the extent of the aforementioned gap between productivity and median wages—work that Pethokoukis misappropriates to claim that income gains have been shared “fairly equally.” Gordon found that the productivity gap may be about a tenth the size as what is commonly thought, but, as he told me, that doesn’t negate the story about runaway wealth at the top of the income distribution. “The evidence on the long-term increase of inequality within the bottom 99 percent is ambiguous and complex, but what stands out like a searchlight is the unprecedented and increasing inequality between the bottom 99 percent and the top 1 percent,” Gordon told me.

There’s more at the link on Pethokoukis’s other claims.

1Here’s the direct quote: “The evidence is incontrovertible that American income inequality has increased in the United States since the 1970s.”
