Joseph Nye recently wrote an op-ed complaining that academic political scientists have become too cloistered. He’d like them to get out more, work on real-world problems, and take positions in government where they can apply their skills and knowledge.

Over at Dan Drezner’s site, Georgetown’s Raj M. Desai and James Raymond Vreeland fire back, and among other things they say this:

Nye complains about the methodological rigor in contemporary political science as an impediment to its relevance. This is ironic, given that it is precisely this rigor that has allowed modern political science to improve its forecasting power — something that is presumably vital to policymaking. We now have better statistical tools to predict, for example, the likelihood of state failure, civil conflict, democratic breakdown, and other changes in governments. Game-theoretic models can be used to analyze trade disputes and war, as well as the behavior of international organizations, terrorist movements, and nuclear states with greater precision and clarity than just a few decades before.

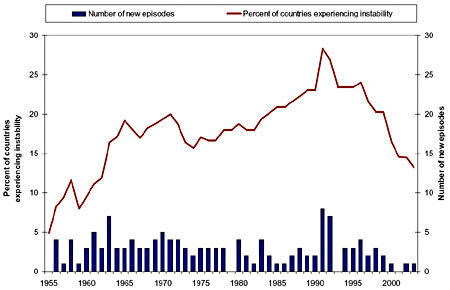

Really? Modern political science has improved its forecasting power? I didn’t have the energy to click on all the links above, but I did click on the first one, which took me to the website of PITF, the Political Instability Task Force. And sure enough, a 2005 paper titled “A Global Forecasting Model of Political Instability” claimed to be able to predict state failure with a surprisingly simple model:

Because the onset of instability is a complex process with diverse causal pathways, we originally expected that no simple model would have much success in identifying the factors associated with the onset of such crises….It was to our considerable surprise that these expectations turned out to be wrong.

….To give but one example, we found early on that lower-income countries showed a higher risk of instability. This is one of the best-established results in the conflict literature, of course, so we sought to improve on it. We not only tried substituting such other standard of living indicators as infant mortality, calories consumed per capita, nutritional status, percent living in poverty, life expectancy, and others for real per capita income, we also tried using a basket of quality-of-life indicators in a neural model that we hoped would provide a far more sophisticated and accurate specification of how income affected instability. Yet no model, no matter how complex, performed significantly better than models that simply used infant mortality (logged and normalized) as a single indicator of standard of living.

….What did matter?….As we have said, to our surprise, a quite simple model without interaction terms and with few nonlinearities is consistently over 80% accurate in distinguishing countries that experienced instability two years hence from those that remained stable.

The model essentially has only four independent variables: regime type, infant mortality [], a “bad neighborhood” indicator flagging cases with four or more bordering states embroiled in armed civil or ethnic conflict, and the presence or absence of state-led discrimination.
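
For the curious, here is roughly what those four inputs might look like as data. This is only a sketch in Python, not the PITF team's code: the field names, the regime labels, and the choice to normalize infant mortality against a world median are all my assumptions, and it stops at building the inputs rather than fitting or scoring anything.

```python
import math
from dataclasses import dataclass


@dataclass
class CountryYear:
    """One country in one year. All field names here are hypothetical."""
    regime_type: str                # categorical label, e.g. "partial democracy" (assumed)
    infant_mortality: float         # deaths per 1,000 live births
    neighbors_in_conflict: int      # bordering states in armed civil or ethnic conflict
    state_led_discrimination: bool  # presence or absence of state-led discrimination


def model_inputs(c: CountryYear, world_median_mortality: float) -> dict:
    """Build the four independent variables named in the excerpt.

    Assumption: "logged and normalized" is taken to mean the log of the
    ratio to a world median. The excerpt does not say how PITF normalized.
    """
    return {
        "regime_type": c.regime_type,
        "log_infant_mortality": math.log(c.infant_mortality / world_median_mortality),
        # The excerpt's threshold: four or more bordering states in conflict.
        "bad_neighborhood": c.neighbors_in_conflict >= 4,
        "state_led_discrimination": c.state_led_discrimination,
    }
```

Feeding these into a fitted model, and checking the "over 80% accurate" figure, would require PITF's actual data and coefficients, which the excerpt doesn't give.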

Interesting! Maybe. But aside from technical questions about whether this model really works (where’s the annual prediction of which countries are going to implode within the next 24 months?), does this demonstrate that Nye was right or wrong? PITF was government sponsored in the first place, so you’d think its academic results would now be in use at the State Department. Are they? And if not, why not?