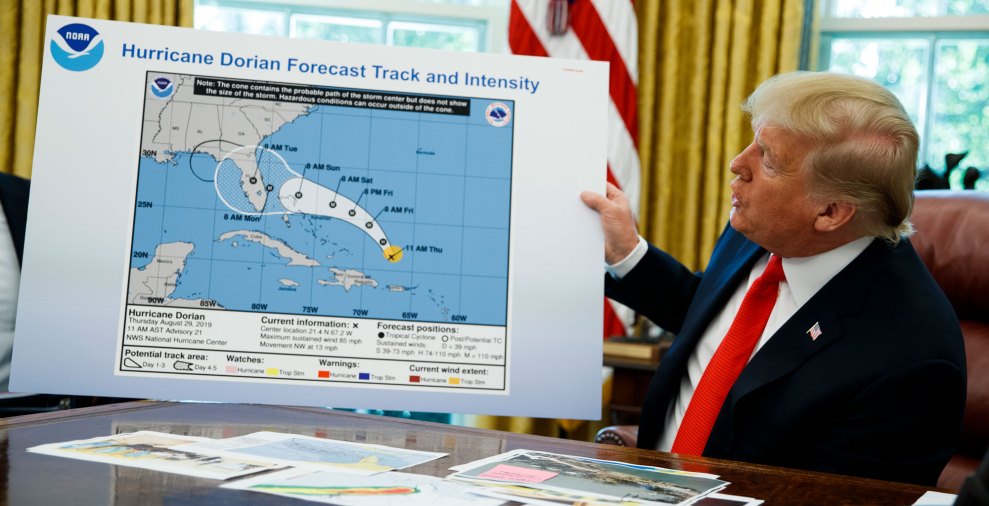

Don't mind that giant internet-connected recording device on your kitchen table. Rodrigo Reyes Marin/AFLO via ZUMA Press

Update (5/25/2018): An Amazon spokesperson responded to Mother Jones late Thursday night.

> Echo woke up due to a word in background conversation sounding like “Alexa.” Then, the subsequent conversation was heard as a “send message” request. At which point, Alexa said out loud “To whom?” At which point, the background conversation was interpreted as a name in the customer’s contact list. Alexa then asked out loud, “[contact name], right?” Alexa then interpreted background conversation as “right”. As unlikely as this string of events is, we are evaluating options to make this case even less likely.

In case you needed another reminder that Amazon’s Echo, an internet-connected recording device designed to listen and respond to verbal commands, can pose security and privacy risks for you and your loved ones, here you go.

A family in Portland, Oregon, contacted the company recently to ask it to investigate why the device had recorded private conversations in their home and sent the audio to a person in another state.

“My husband and I would joke and say I’d bet these devices are listening to what we’re saying,” a woman named Danielle, who didn’t want her last name used, told Seattle’s KIRO-TV. She explained that a couple of weeks ago one of her husband’s employees called them and told them to “unplug your Alexa devices right now…you’re being hacked.” The employee said they had received an audio file containing what seemed like a private conversation. At first the family did not believe it, but the employee was able to relay details of the conversation, and the family did as told.

“I felt invaded… I said, ‘I’m never plugging that device in again, because I can’t trust it,’” she added.

Amazon did not immediately respond to a Mother Jones request for comment. But Danielle told KIRO-TV that after she called Amazon repeatedly, an engineer “investigated” and confirmed that the device had sent messages to someone on her husband’s contact list. “He apologized like 15 times in a matter of 30 minutes,” she said, adding that he told them “this is something we need to fix.” The engineer did not provide details about what happened, but said that “the device just guessed what we were saying.”

The company told KIRO-TV in a statement that it takes privacy seriously and that what happened to Danielle “was an extremely rare occurrence.” The company offered to “de-provision” the device so the family could still use its Smart Home features, Danielle said, but she’d rather get a refund, “which [Amazon has] been unwilling to do,” she told the TV station.

This is just the latest example of creepy behavior from Alexa, the voice assistant that Echo devices speak through. Late last month, security researchers disclosed that they had found a bug that allowed them to get the device to send transcripts of conversations it recorded to outside parties. (Amazon has said it has fixed the vulnerability.) In March, the company said it would reprogram the devices after owners complained that their Alexas were emitting a creepy or sinister laugh.

The experience of Danielle and her family highlights the privacy risks intrinsic to audio-enabled home assistants. “Mobile phones, computer webcams and now, digital assistants also can be co-opted for nefarious purposes,” Wired’s Gerald Sauer wrote in 2017 in a piece about Alexa recordings being used as part of a murder investigation. “These are not potential listening devices. They are listening devices—that’s why they exist.”