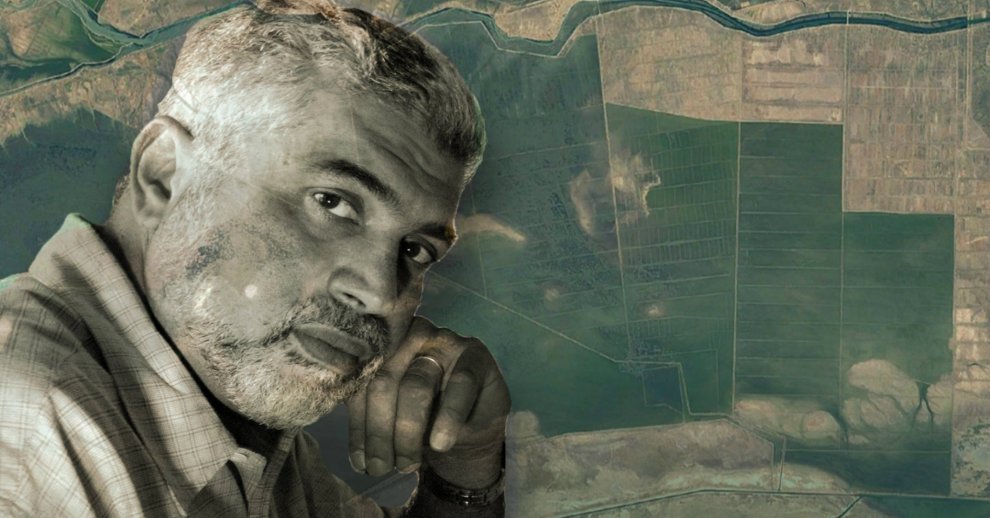

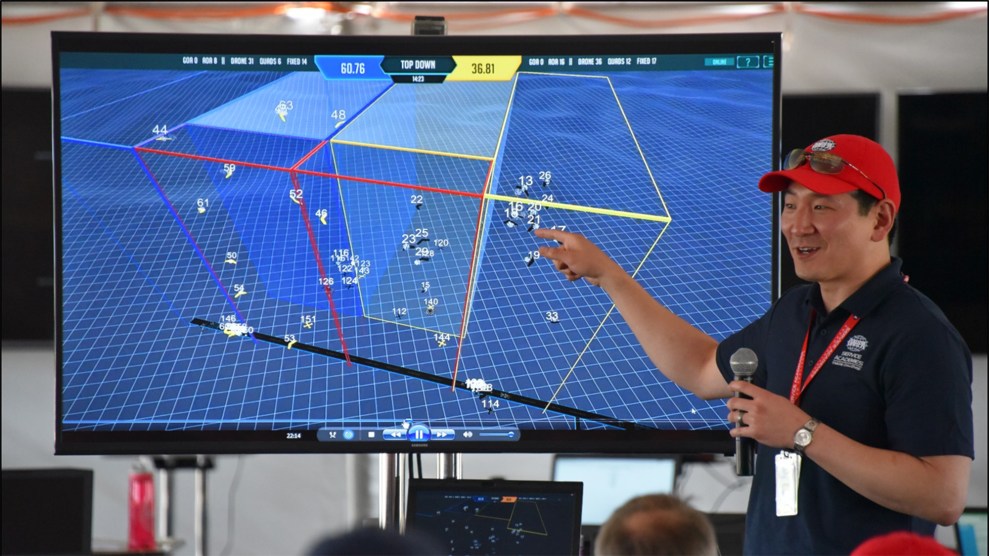

OFFSET (OFFensive Swarm-Enabled Tactics) program manager Timothy Chung (DARPA)

Last week, the Defense Advanced Research Projects Agency (DARPA), the Defense Department’s R&D arm, announced the next phase of its OFFSET (OFFensive Swarm-Enabled Tactics) program, which seeks to “improve force protection, firepower, precision effects, and intelligence, surveillance, and reconnaissance (ISR) capabilities.” In a techspeak-laden video, program manager Timothy Chung cheerfully encourages robot developers to pitch their ideas for “disruptive new capabilities” that “scale to provide exponential advantage.” In other words: Develop groups of small and increasingly autonomous aerial or ground robots that could one day be deployed on the battlefield.

This isn’t just Black Mirror-style fantasy: The military has already tested self-governing robotic fleets with colorful names like LOCUST (Low-Cost UAV Swarming Technology). And now it’s looking to expand their capabilities, “allowing the warfighter to outmaneuver our adversaries in…complex urban environments” (that is, the places where actual people live in the countries where US forces operate). Participants in DARPA’s newly announced “swarm sprint” are supposed to envision “utilizing a diverse swarm of 50 air and ground robots to isolate an urban objective within an area of two city blocks over a mission duration of 15 to 30 minutes.”

What does that actually mean? Building on DARPA’s script, Fox News commentator and defense specialist Allison Barrie envisions this scenario: “Say you have a bomb maker responsible for killing a busload of children, our military will release 50 robots—a mix of ground robots and flying drones…Their objective? They must isolate the target within a 2 square city blocks within 15 to 30 minutes max.” Swarms, she writes, will “significantly augment the firepower a team can bring to a fight.”

DARPA has not said that swarms will be weaponized or capable of attacking human targets. Yet recent history suggests that this is not beyond the realm of possibility. Robot swarms are descended in part from unmanned aerial vehicles, the most infamous of which is the Predator drone. What started as a surveillance gadget rapidly evolved into a lethal remote-controlled weapon under President George W. Bush before becoming President Barack Obama’s weapon of choice. At last count, according to the Bureau of Investigative Journalism, American drone strikes have killed up to 1,500 civilians, nearly 350 of them children.

Asked recently about military robots making life-or-death decisions, DARPA Director Steven Walker told the Hill that there is “a pretty clear DOD policy that says we’re not going to put a machine in charge of making that decision.”

Yet in advance of meetings on lethal autonomous weapons being held next week at the United Nations in Geneva, the United States has drafted a working paper about the technology’s “humanitarian benefits.” It argues against “trying to stigmatize or ban such emerging technologies in the area of lethal autonomous weapon systems” in favor of “innovation that furthers the objectives and purposes of the Convention [on Certain Conventional Weapons].” That convention, also known as the Inhumane Weapons Convention, blocks the use of weapons found to “cause unnecessary or unjustifiable suffering to combatants or to affect civilians indiscriminately.”

Robotics and tech experts have sounded the alarm that artificially intelligent killer robots are coming uncomfortably close to reality. “When it comes to warfare, this is something that needs to be addressed before it’s too late,” says Mary Wareham, the global coordinator of the Campaign to Stop Killer Robots, which is seeking to preemptively ban fully autonomous weapons. “It’s a scary future.”